A/B Testing Methodology

Let’s start with the differences between multi-armed bandit tests and traditional 50/50 split A/B tests so that we’re all on the same page. All A/B tests pit two or more versions of a page against each other.

Classic 50/50 Split A/B Testing

Let’s say you have a Control and one Variant. In a typical A/B test, your traffic will be split evenly until you turn off the test. If the Control is performing with an 80% conversion rate, but the Variant only has a 20% conversion rate, 50% of your traffic will still be sent to the variant that is performing poorly.
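To make the cost of an even split concrete, here is a minimal sketch of the arithmetic using the 80% and 20% conversion rates from the example above. The function name `expected_conversions` and the figure of 1,000 visitors are assumptions made for illustration only.

```python
def expected_conversions(visitors, rates, weights):
    """Expected conversions when `visitors` are split across variants by `weights`."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(visitors * w * r for w, r in zip(weights, rates))


# Conversion rates from the example: Control 80%, Variant 20%.
rates = [0.80, 0.20]

# Hypothetical traffic of 1,000 visitors.
even_split = expected_conversions(1000, rates, [0.5, 0.5])
all_control = expected_conversions(1000, rates, [1.0, 0.0])

print(f"50/50 split:  {even_split:.0f} expected conversions")
print(f"all Control:  {all_control:.0f} expected conversions")
```

With these numbers, the even split is expected to yield roughly 500 conversions, compared with roughly 800 if every visitor saw the Control. The gap is the price of continuing to send half of your traffic to the weaker variant.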

Multi-Armed Bandit Test

In a multi-armed bandit experiment, your goal is to find the best choice or outcome while also minimizing your risk of failure. This is accomplished by showing the better-performing option more frequently as the test progresses.

To put this into context, imagine a casino where you’re facing a row of slot machines. Your aim is to discover the machine that has the highest chance of winning big while wasting the least amount of money (and time) in the process. Your challenge then is to be able to exploit winning slots while also trying out new machines in the hopes that they’ll actually deliver an even higher payout. This is why the Crazy Egg A/B testing algorithm constantly adjusts visitor traffic to minimize losses with losing “machines” so you can quickly see positive improvements in your page performance.

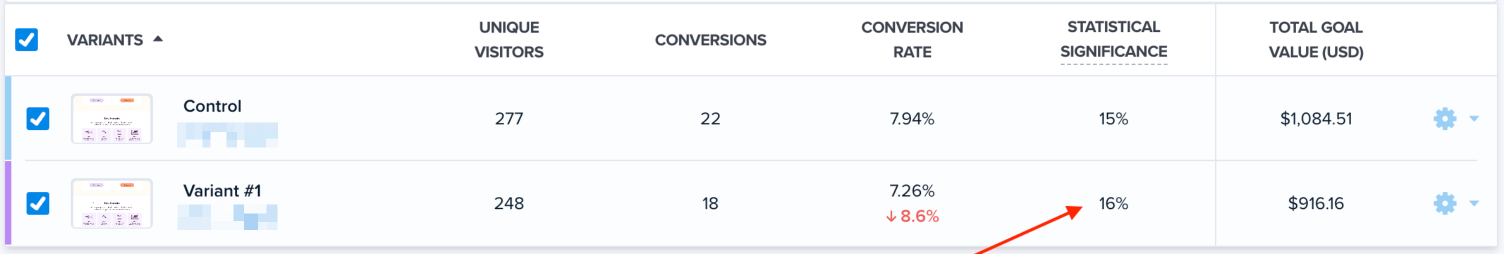

With a multi-armed bandit approach, the conversion rates of your variants are constantly monitored, and an algorithm uses them to decide how to split the traffic to maximize your return on investment. As a result, if the Control is performing better, more traffic will be sent to the Control.

Each variant you create for an A/B test will display its weight, creation date, number of views, and number of conversions. We look at the number of views, the number of conversions, and the creation date to decide the weight (the percentage of visitors who see the variant). These weights are adjusted daily based on the cumulative results so far.
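As an illustration of how weights could be derived from cumulative views and conversions, here is a minimal sketch using a Thompson-sampling style calculation. This is an assumption chosen for illustration, not a description of the actual Crazy Egg algorithm. The function name `bandit_weights`, the example totals, and the Beta(1, 1) prior are all hypothetical, and the sketch ignores the creation date.

```python
import random


def bandit_weights(stats, draws=10_000, seed=0):
    """Estimate traffic weights from cumulative (views, conversions) per variant.

    Each weight is the estimated probability that the variant has the highest
    true conversion rate, using a Beta(1 + conversions, 1 + non-conversions)
    posterior for each variant.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    names = list(stats)
    wins = {name: 0 for name in names}
    for _ in range(draws):
        # Draw one plausible conversion rate per variant from its posterior.
        sampled = {
            name: rng.betavariate(1 + conv, 1 + views - conv)
            for name, (views, conv) in stats.items()
        }
        # Credit the variant whose sampled rate is highest in this draw.
        wins[max(sampled, key=sampled.get)] += 1
    return {name: wins[name] / draws for name in names}


# Hypothetical cumulative totals: (views, conversions).
stats = {"control": (500, 60), "variant": (500, 40)}
weights = bandit_weights(stats)
print(weights)
```

Re-running a calculation like this once a day on the cumulative totals would shift more traffic toward whichever variant currently looks stronger, while still leaving some traffic for the other variant as long as the data leaves room for doubt.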

Still have doubts about the success of the Multi-Armed Bandit A/B Testing Methodology?

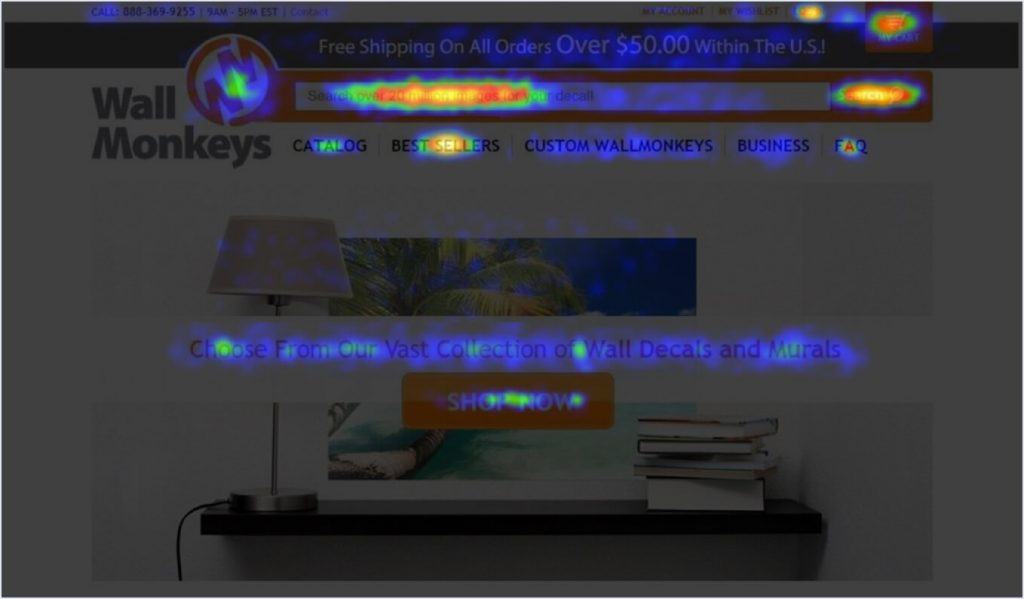

Ask e-commerce business Wall Monkey! They increased their conversion rate by 550% after analyzing their Snapshot Reports and testing their theories with our AB Tester. The results don’t lie.

The truth of the matter is that all A/B test methodologies have sound mathematical backing; it’s a matter of preference. Our customers have said they want a method that is sound and that quickly gives them an idea of what is working and what is not. Multi-Armed Bandit A/B Testing does this.

Why Does Statistical Significance Fluctuate in Multi-Armed Bandit Tests?

Statistical significance in an A/B test isn’t a fixed value; it evolves as more data comes in. This fluctuation is even more noticeable when using the Multi-Armed Bandit (MAB) methodology because of how traffic is dynamically allocated.

Key Reasons for Fluctuation:

- Dynamic Traffic Allocation:

  - In a traditional A/B test, traffic is evenly split throughout the test.

  - In MAB, traffic is dynamically shifted towards better-performing variants over time. This means each variant’s share of traffic isn’t constant, which can cause temporary swings in statistical significance as the algorithm adjusts.

- Sample Size:

  - Early in the test, sample sizes are smaller, and the results are more prone to volatility.

  - As more data is collected, statistical significance tends to stabilize.

- Natural Variability in Conversions:

  - Conversion events are not perfectly consistent; random variation and external factors (e.g., seasonality) can temporarily affect results.

What to Expect:

- Early fluctuations are normal, especially with MAB.

- Over time, as more data is gathered and the algorithm settles on optimal traffic allocation, statistical significance is more likely to stabilize.
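
To see why the reported significance moves as data accumulates, here is a minimal sketch that recomputes a p-value at several points in a test. It uses a standard pooled two-proportion z-test, which is an assumption for illustration and not necessarily how Crazy Egg calculates significance. The function name `two_proportion_p_value` and the checkpoint numbers are hypothetical.

```python
import math


def two_proportion_p_value(views_a, conv_a, views_b, conv_b):
    """Two-sided p-value for a pooled two-proportion z-test."""
    p_a, p_b = conv_a / views_a, conv_b / views_b
    pooled = (conv_a + conv_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_a - p_b) / se
    # Two-sided tail probability of a standard normal: 2 * (1 - Phi(|z|)).
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical cumulative totals at three points in a test:
# (control views, control conversions, variant views, variant conversions).
# The Control's views grow faster, as they would under dynamic allocation.
checkpoints = [
    (100, 14, 100, 8),
    (400, 46, 250, 24),
    (1200, 144, 400, 34),
]

for views_a, conv_a, views_b, conv_b in checkpoints:
    p = two_proportion_p_value(views_a, conv_a, views_b, conv_b)
    print(f"control {conv_a}/{views_a}, variant {conv_b}/{views_b}: p-value = {p:.3f}")
```

Because each checkpoint adds new visitors in uneven proportions, the p-value can move up or down from one checkpoint to the next before it settles. Keep in mind that a fixed-sample test like this one is only an approximation when traffic is allocated adaptively and results are checked repeatedly.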